Determinism in League of Legends: Implementation

Taking the League of Legends game server from a nondeterministic process to its current state required almost a full year of effort from multiple engineers. As a side benefit of this effort, we holistically improved the codebase’s robustness, discoverability, and maintainability. We removed swaths of dead or redundant code and created new opportunity spaces for gameplay exploration. We also created powerful new tools that allow us to record, play back, and validate real-world League of Legends games, enabling new opportunities for optimizing and testing code changes. Most importantly, we delivered a functional basis for the Deterministic Disaster Recovery (DDR) toolset, which is, as of this writing, deployed in an early form in the NA LCS.

In this post, I’ll dig into the implementation of determinism in League. For more on the problem we were solving, check out the intro to this series.

Defining Determinism

A process is said to be deterministic when, given a fixed set of inputs, it always produces the same outputs. Consider an example of determinism in simple algebra:

The equation:

f(x) = x + 1

...is deterministic.

If one substitutes the number 1 for x in this equation, f(1) will always equal 2.The good news is that most programming languages, computer hardware, and software designs are already deterministic by nature. When software fails to behave in a deterministic fashion, we call the event a divergence. A divergence can be discovered by comparing the runtime state of a game (some portion of the game data stored in memory) between multiple executions with fixed inputs.

Computer software is designed to be free of divergences. C++, x86 hardware, flat files, and executable code are already deterministic in the ways we care about, so we may infer that any divergences encountered are caused by unexpected inputs. By extrapolating, we can arrive at the most important guideline for achieving determinism: software divergences are the product of unexpected inputs.

Validating Determinism

To validate that our systems are deterministic, we needed to be able to reliably measure and compare outputs over multiple executions with the same inputs. Divergences in games are particularly troublesome when attempting to build a fully deterministic system. The iterative nature of the frame update loop means that a simulation encountering a divergence will now feed that bad state back into the simulation, typically causing the game to progressively diverge and eventually break down into chaos. The goal of determinism validation is to obtain the root cause of the divergence.

Making 100% of the working set of our game server deterministic was not feasible. There were technical complications that made it nearly impossible to achieve 100% memory snapshot parity between runs. Instead, we focused on the gameplay-essential state that governs the critical interactions between the players and the game. We didn’t need to make elements like our outgoing network traffic deterministic, as we envisioned a DDR workflow in which the clients would not be connected to the server during disaster recovery.

Our team settled on a goal that we dubbed gameplay deterministic to emphasize the fact that there was some state that was not useful for DDR or general feature development and could remain nondeterministic.

Identifying Inputs

We previously established that LoL’s divergences are the result of unexpected inputs, but there remained the task of identifying the sources of those inputs.

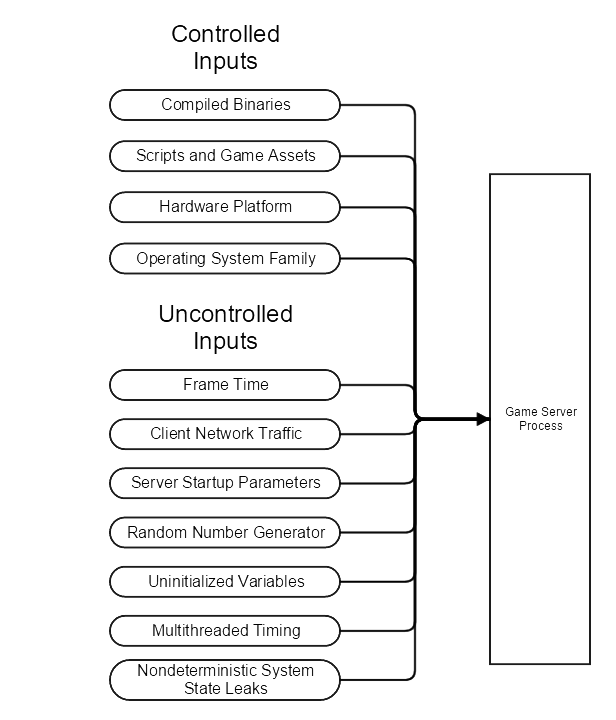

We started by organizing the well-understood categories of inputs into lists: controlled and uncontrolled. Controlled inputs are easy to understand: they are inputs that never change between executions of the same game version. Uncontrolled inputs describe any input into the LoL server that was either noisy, random, or generated from player inputs.

Our initial list of uncontrolled inputs looked something like this:

Recording Inputs

Uncontrolled inputs fell into one of two categories: those that could be eliminated and those that could be recorded and played back. Our specific disaster recovery scenario supported recording a game and playing back to a point in time before the emergence of a bug, so recordings became a natural solution to many issues. For the most part, our uncontrolled inputs were things that we want to be uncontrolled, such as the actions a player takes or the starting configuration of a game.

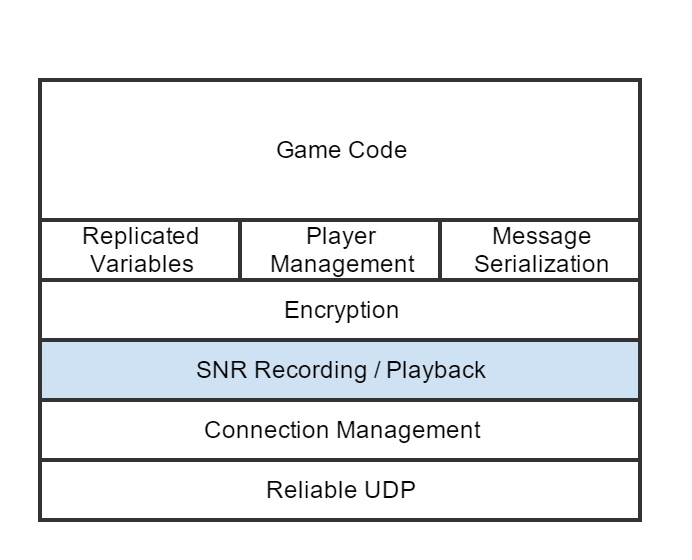

Given our usage scenarios, the optimal path was to simply record network inputs each frame in the order in which they’re received by the server. This led to the development of one of our key pieces of determinism functionality, the Server Network Recording (SNR). The SNR was later extended to include all of the uncontrolled inputs to the game that we couldn’t simply remove.

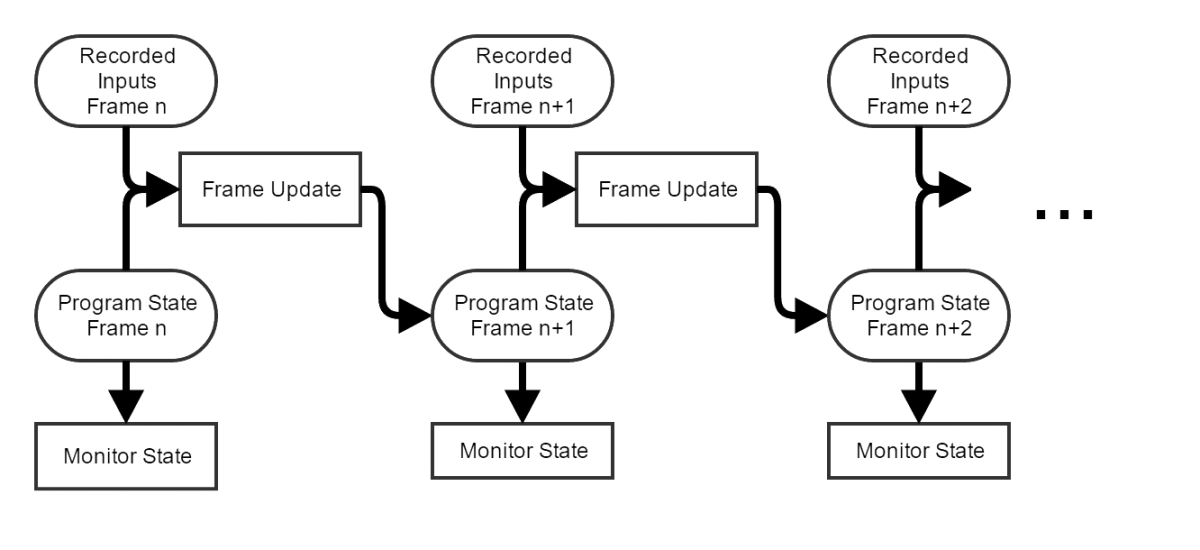

A critical simplification that we made up-front was that SNRs would record and play back the state of the game a single frame at a time. This led to numerous conventions and simplifications that allowed us to deliver gameplay server determinism in a reasonable amount of time.

The above is what the execution flow looks like. Quantization of the game simulation is a convenient way to create entry points for monitoring, playback, and recording within the game loop. It also means that temporary state during the frame update does not necessarily need to be 100% deterministic, which saves a huge amount of time trying to fix divergent execution within code blocks that have no long-term consequences.

Taking Control of Inputs

Time

The League game server code had a glaring complication that had emerged in its rapid growth during early development: it contained 6 completely different clock and timing APIs. Each individual clock or timer impacted a separate set of game or engine systems. Many also lacked critical functionality which would make them suitable for determinism. From the start of the project, we identified this as the single largest barrier to making the game server deterministic.

The first major element we implemented as part of our determinism goals was to unify our game clocks into a single implementation with as few global clock instances as possible. This yielded the Unified Clock effort, which was a project large enough to warrant it’s own blog post. I won’t go into too many details here, but the entire effort spanned nearly six months and required major refactors of numerous systems.

We also encountered numerous places where sub-frame timings were being used in ways that could affect gameplay state. Since we were optimizing for per-frame quantization of state anyways, calls to acquire instantaneous process time had to be mostly eliminated.

The worst offenders were long-frame-prevention checks, which could early-exit a batch of game object updates and load balance them over multiple frames. This was a sensible implementation early on in the development of LoL when there were still a lot of unknowns, but is no longer a desirable pattern. Removing these throttles turned out to be benign, as continuous optimization of the LoL codebase meant that this load balancing is rarely used in practice.

When the dust settled from the refactor, we had an important decision to make: do we make the Unified Clock run at a fixed step, or do we play it safe and record the per-frame clock timings into the SNR? League of Legends does not use a fixed-step server clock, but rather a measured frame delta time which we throttle to a target frame rate. This makes the server more resilient to system-wide performance spikes, but this pattern definitely complicated determinism.

We played it safe by retaining the variable frame delta times, which in turn helped us deliver DDR in a shorter timeframe. However, this is something I’d like to explore further in the future. With a fixed timestep, we may be able to improve the knowability and testability of numerous game systems. It may also simplify the creation of a debug-network-lock-step-mode to game clients, which enables some valuable debugging and profiling functionality.

Client Gameplay Traffic

The primary source of uncontrolled inputs to League of Legends is the client network traffic driven by player actions. The only viable solution was to record this server network traffic.

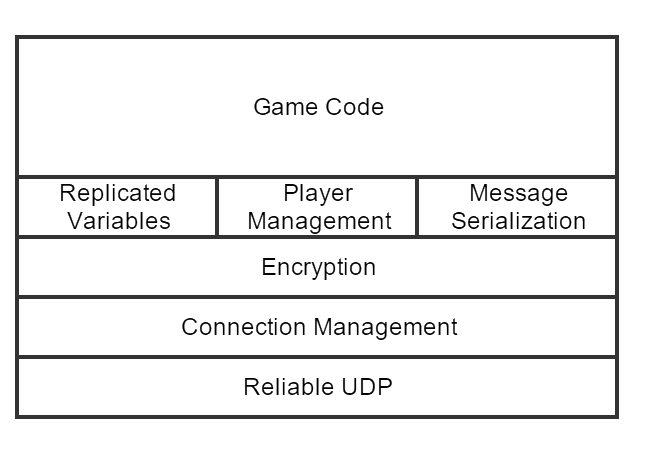

We had several ideas about where we wanted to record the traffic. We could have, for example, recorded the network packets after they’d been decrypted and converted into our native gameplay messages. Capturing at higher levels has some interesting advantages, as we could use the recordings themselves as a kind of human-readable debugging tool. However, we decided it was generally better to make more of the codebase run deterministically rather than less as long as the implementation costs were the same. Therefore, we captured the packets at a somewhat low level in our application stack, but above our low-level network system.

Playing client traffic back was trickier than recording it. This required an adapter between the low-level network system (which is nondeterministic) and the portions of the game that utilize network traffic to drive behavior. This is where some heavy complexity began to set in.

The low-level network layer is not only responsible for packet handling, but it also serves as a source of truth about client connections and status in the game session. This required us to extend the adapter to “fake” the client endpoints, which unfortunately were not well isolated from the rest of the game systems. Early in the process, this coupling between gameplay and low-level client state would regularly cause problems for us, from crashes during playback to subtle divergences based on uncontrolled variables such as client ping.

Server Startup Values

In a given League of Legends match, there are a vast number of settings and permutations, from champions chosen, to runes and masteries, to hidden values like game ids and encryption keys. My back-of-the-napkin math on the number of possible combinations of game settings you can have on on any given patch yielded an absurd number of zeroes. I stopped trying to multiply in new factors after I ran into silly numbers like undecillion.

Clearly, this was something we would need to record. Each server process that runs a single game of League of Legends is started by a service called the Local Server Manager or LSM. The LSM provides command lines and a configuration file called a Megapacket when it launches a new server instance. The Megapacket acts like an enormous command-line extension, containing all of the settings unique to that single execution of the game.

Recording this information was pretty straightforward, since it was already contained in serialized flat files. The trouble started when trying to play back this recording. We found very quickly that our startup process was highly inflexible, and required a fairly deep re-write of our server startup code. This refactor was particularly nerve-racking, since this code had remained relatively unchanged for almost a decade, and negative side-effects from the changes could be subtle.

Random Number Generators

Despite their implication, random numbers generators in most software are already deterministic. Most of what we call random number generators (RNGs) in computing are more accurately called pseudorandom number generators (PRNGs). This is because nearly all random number APIs rely on formulas that “twist” or “shift” a given seed value into another value in a process that always gives the same series of outputs with a given starting seed.

Divergences begin when you can’t control the order, the number of calls, or the initial seed of a random number generator.

The first problem that we identified with PRNGs is that we were using more than one, and some of them were being used by nondeterministic parts of the game server. This forced us to isolate a single controlled PRNG away from the std lib and c-style randoms that were being used in various places through the codebase.

After consulting with several other Rioters, our team settled on developing a new PRNG implementation based on XOR-SHIFT. It’s simple, fast, and has a great distribution, which means it’s more than good enough for gameplay. The main body of work was simply identifying all of the different game-affecting PRNGs in the game and unifying them to use our new API.

The second step was to decide on the nature of the initial seed value. In many newer games, local system time is substituted for a seed so that the RNG can’t be “gamed”. The probability of someone doing this successfully in League is incredibly low, even if we used a fixed seed like most early console games. However, just to be extra safe, we decided to use a globally unique game id as the initial PRNG seed. This value comes from our command line, which we were already recording in the SNR.

Uninitialized Variables

Anyone who has dealt with deterministic software written in C/C++ has probably run across divergences from uninitialized variables at some point. In C++, a primitive type that is not explicitly given an initial value will simply pick up whatever garbage values are stored at its memory address when it was allocated. While these uninitialized variables are benign most of the time, there are scenarios where they leak into the game state, resulting in difficult-to-find divergences between multiple runs.

A particularly egregious code example follows:

class CFoo

{

private:

int32_t bar;

void DoStuff(); //does something that changes game state

public:

void SomeFunction()

{

for(; bar < 10; ++bar)

{

DoStuff();

}

}

};With typical compiler settings, this code won’t throw so much as a compiler warning. In this example, bar is not initialized, so it may contain any value, depending on how it’s allocated. The result is SomeFunction might call DoStuff() anywhere from 0 to 10 times.

To nip this problem in the bud, we started moving to C++11-style inline initialization of member fields wherever possible. This creates a wonderful visual cue to remind you that you should, in general, initialize everything. It’s such a powerful tool that we’re adjusting our internal coding standards to favor inline member initialization over initializer lists in constructors.

Obviously the right course is to force developers to zero-initialize everything all the time, right? This is where things get contentious.

As an example of said controversy, we have a very common struct found in thousands of places in code called Riot::Vector3f. This is a simple 3 dimensional vector containing 3 32-bit floats. This type is used extensively to represent properties like positions, velocities, directions, and other geometric concepts. Individually, the performance hit of forcing a Riot::Vector3f instance to be zero-initialized is tiny, but when considered over the millions of times that these types are constructed in a game, the performance hit would add up. In practice, most usages of these values were very careful to initialize them to known values before using them anyways.

Instead of forcing the issue, we decided to set up our validation tools in such a way that most variables that would end a frame with a nondeterministic value could be caught and spot fixed. This detailed monitoring of values also yielded some interesting dividends: we were able to find and remove large numbers of variables that were never changed at all in the course of a game.

Async Processing

Many game developers have been stymied in their efforts to make a deterministic game simulation loop when working with highly multithreaded engines. Multithreading is common in modern games even if the outer Input/Update/Render loop remains a relatively serial pattern. As complexity has increased over time, games have become increasingly multithreaded and capable of modifying behavior based on the timing of asynchronous tasks.

While we were initially worried about this problem, it turned out our fears were largely unfounded. The server game loop was built from the ground up to be highly efficient on a single thread. While we do support multiple threads in each server process, none of them were discovered to actually change the state of the main thread without deterministically blocking the main thread when they were delayed.

The low-level network code that bucked this trend had been effectively removed from the equation, as we’d decided early on that it wasn’t necessary to make the low-level network deterministic in the first place. We also had an advantage in our tendency to pre-load all of our game data up-front to avoid I/O complexities while the match is playing. Not only does this help us avoid performance hitches from I/O contention, we’re also able to avoid nondeterministic I/O thread races completely.

Nondeterministic System State Leaks

As portions of the League server gamestate were nondeterministic, we needed to ensure those systems did not “leak” into our pristine deterministic game simulation. Like every other section, we’d have to either record or avoid these situations.

An example of a system in the game that we didn’t want to spend the time on was the network layer. This system used multiple threads, changed behavior based on the properties of a network connection, and expected to communicate with a game client (which is a separate process and would also have to be made deterministic). Rather than add in the complexity required to play back an SNR, we decided to adapt the system to provide “fake client” endpoints as described above.

We made a conscious decision to avoid trying to make memory addresses themselves deterministic. It would have forced us to make significant changes to our allocators and systems containing pointers to nondeterministic allocations in the game. We would have also been forced to disable ASLR (Address Space Layout Randomization), which is such an important security feature that it is now enabled by default on Windows, OSX, and Linux. This decision also yielded an opportunity to correct any gameplay behaviors implicitly affected by randomness in our memory addresses, which is rarely a desired outcome.

There was one class of common algorithmic behavior that caused particularly challenging problems relating to memory addresses - pointer comparisons.

Here’s an example of a pointer comparison divergence you can try with any C++ compiler:

#include <stdio.h>

#include <stdint.h>

#include <map>

class CGameObject

{

public:

void Update(int32_t updateIndex)

{

printf("Update ID: %d UpdateIndex: %d\n", id, updateIndex);

}

int32_t id = 0;

};

class CGameMetadata

{

public:

bool shouldUpdate = false;

};

std::map<CGameObject*, CGameMetadata> metadataMap;

void UpdateObjects()

{

int32_t updateIndex = 0;

for (auto& currentPair : metadataMap)

{

if (currentPair.second.shouldUpdate)

{

currentPair.first->Update(updateIndex);

}

++updateIndex;

}

}

int main()

{

for (int32_t i = 0; i < 10; ++i)

{

CGameMetadata meta;

meta.shouldUpdate = i & 1;

CGameObject* obj = new CGameObject();

obj->id = i;

metadataMap[obj] = meta;

}

UpdateObjects();

// Wait for user input to keep the output window around on Windows

getchar();

return 0;

}In this simplified example, there’s no guarantee that objects will be updated in any particular order. When the elements are inserted, they’re sorted into the ordered map, which compares their virtual addresses when determining their position in the map. As a result, each time you run this code, you may see a different update order. Some OSes will reproduce this issue more often than others since the order of the addresses returned from the allocator is an internal implementation detail.

The main problem with this pattern is that it’s very hard to intuit that this causes a divergence by static code inspection. In some cases, game execution is not impacted by the iteration order, and the pattern is acceptable. In other cases, the ordering of these updates might affect behavior. Even though we know there’s a vulnerability here, it may not be obvious whether it results in a divergence at the end of a frame.

To avoid a wild goose chase, we relied heavily on testing to find pointer comparison problems. However, even that yielded complexities. On many OSes, the virtual memory allocation ordering for a single run of the game would be nearly identical between runs. However, we would detect increased incidences of divergences when we recorded on one OS and played back on a different one.

This interesting property leads us to believe that various Windows versions have subtle differences in allocation behavior. On the plus side, without this odd property when migrating between OSes, we might not have caught low-probability divergences until they’d caused a DDR failure.

Conclusion

We anticipate several new workflow and technology improvements to aid our internal development as a result of now having a deterministic server simulation. Other League of Legends developers are regularly discovering new ways to use determinism to improve the quality and speed of their work. The cost to make the game deterministic was quite high, but we will now be able to reap the fruits of this technology indefinitely going forward.

If you’ve got any questions relating to the implementation of determinism, I’d love to hear them!

For more information, check out the rest of this series:

Part I: Introduction

Part II: Implementation (this article)

Part III: Unified Clock

Part IV: Fixing Divergences